(github)


The MNIST database of handwritten digits is perhaps the most widely used dataset in machine learning. Given a set of handwritten digits, each labeled with the digit it depicts, a neural network can learn to read handwritten digits it has never seen before with astounding accuracy.

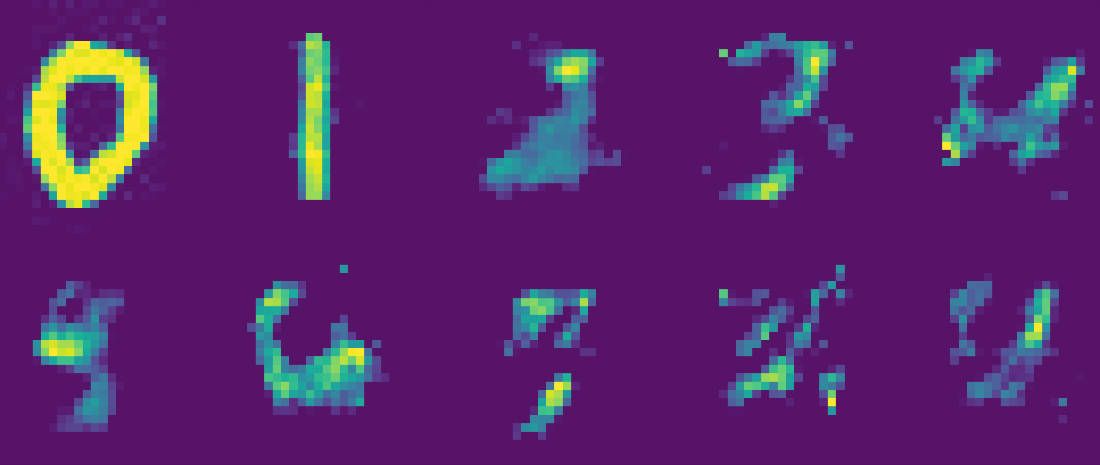

As a first foray into building neural networks, I wanted to turn this basic task in on itself: to bend the formulaic job of classification into a more creative one. This neural network, "CountSheep", is trained to take in a hand-drawn digit and generate a hand-drawn image of the next number in the sequence.

And when we feed this drawing back into the network, so that it draws from its own drawing, it quickly spirals into abstraction and introspection as each error and imperfection is reinterpreted. As if dozing off from counting sheep, the network drifts into nonsense and sudden, ghastly semblances of meaning.
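Mechanically, this feedback loop is just the network applied over and over to its own output. Here is a minimal Python sketch, assuming the trained network is available as a function `generate` that maps one flattened image to another; both `generate` and `count_sheep` are hypothetical names, not taken from the original project.

```python
from typing import Callable, List

Image = List[float]  # assumption: a flattened 28x28 image, one float per pixel


def count_sheep(generate: Callable[[Image], Image], start: Image, steps: int) -> List[Image]:
    """Feed the network's output back in as its next input, keeping every frame."""
    frames = [start]
    for _ in range(steps):
        # Each new drawing is produced from the previous drawing, not from a human one,
        # so any error in one frame becomes part of the input for the next.
        frames.append(generate(frames[-1]))
    return frames
```

Because nothing outside the loop corrects the drawings, small imperfections compound from frame to frame, which is the drift into abstraction described above.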

But... what does this little network show us? From a practical standpoint, it offers very little. While Optical Character Recognition (OCR) and general image classification are important fields, with applications ranging from mass archival work to self-driving cars, what we have here is Optical Character Generation. And a very limited example at that, as CountSheep can only count upward through the digits 0 to 9, looping from 9 back to 0. On top of that, the network is very small, containing only one hidden layer of 32 nodes.
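For a sense of how small this is, here is a hedged, dependency-free Python sketch of a 784-32-784 multilayer perceptron, plus one way to build training pairs in which each image of digit d is paired with an image of digit (d + 1) mod 10, so 9 wraps to 0. Only the single hidden layer of 32 nodes comes from the description above; the sigmoid activations, squared-error loss, learning rate, weight initialization, pixels scaled to [0, 1], and random choice of target image are all assumptions, and the names `CountSheepNet` and `make_pairs` are hypothetical.

```python
import math
import random

N_PIXELS = 28 * 28  # flattened 28x28 image
N_HIDDEN = 32       # one hidden layer of 32 nodes


def sigmoid(z):
    # Numerically stable logistic function (avoids overflow for large |z|).
    if z >= 0:
        return 1.0 / (1.0 + math.exp(-z))
    e = math.exp(z)
    return e / (1.0 + e)


class CountSheepNet:
    """Hypothetical one-hidden-layer MLP mapping a flattened image to a flattened image."""

    def __init__(self, n_in=N_PIXELS, n_hidden=N_HIDDEN, seed=0):
        rng = random.Random(seed)
        s1, s2 = 1.0 / math.sqrt(n_in), 1.0 / math.sqrt(n_hidden)
        self.w1 = [[rng.uniform(-s1, s1) for _ in range(n_in)] for _ in range(n_hidden)]
        self.b1 = [0.0] * n_hidden
        self.w2 = [[rng.uniform(-s2, s2) for _ in range(n_hidden)] for _ in range(n_in)]
        self.b2 = [0.0] * n_in

    def forward(self, x):
        h = [sigmoid(sum(w * xi for w, xi in zip(row, x)) + b) for row, b in zip(self.w1, self.b1)]
        y = [sigmoid(sum(w * hj for w, hj in zip(row, h)) + b) for row, b in zip(self.w2, self.b2)]
        return h, y

    def train_step(self, x, target, lr=0.5):
        """One SGD step on 0.5 * sum((y - target)^2); returns the loss before the update."""
        h, y = self.forward(x)
        # Output deltas for sigmoid units: (y - t) * y * (1 - y).
        d2 = [(yk - tk) * yk * (1 - yk) for yk, tk in zip(y, target)]
        # Hidden deltas: backpropagate through w2 (before it is updated), then the sigmoid.
        d1 = [sum(d2[k] * self.w2[k][j] for k in range(len(d2))) * h[j] * (1 - h[j])
              for j in range(len(h))]
        for k, dk in enumerate(d2):
            for j, hj in enumerate(h):
                self.w2[k][j] -= lr * dk * hj
            self.b2[k] -= lr * dk
        for j, dj in enumerate(d1):
            for i, xi in enumerate(x):
                self.w1[j][i] -= lr * dj * xi
            self.b1[j] -= lr * dj
        return 0.5 * sum((yk - tk) ** 2 for yk, tk in zip(y, target))


def make_pairs(images, labels, seed=0):
    """Pair each image of digit d with a random image of digit (d + 1) % 10.

    Assumes at least one image exists for every successor digit.
    """
    rng = random.Random(seed)
    by_digit = {}
    for img, d in zip(images, labels):
        by_digit.setdefault(d, []).append(img)
    return [(img, rng.choice(by_digit[(d + 1) % 10])) for img, d in zip(images, labels)]
```

Pure Python keeps the sketch self-contained but is slow for a full MNIST training run; a numerical array library would be the more practical choice.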

Yet this inversion from recognition to generation is, to me, somehow instantly more gripping than even an objectively more complex neural network performing ludicrously accurate OCR. I am reminded of Searle's Chinese Room, something I am not wont to do, as I am rather ambivalent about its message. In Searle's thought experiment, a person sits inside a room with a dictionary of foreign phrases and their corresponding responses. The person receives inputs from the outside world and must look up the matching phrase and output its response. Searle's point is that such a person could perform the task accurately, even mimic conversation, but would not understand the content the way a native speaker of the language would, much as a philosophical zombie could mimic behavior. By extension, if the person and dictionary in the room were replaced with a simple dictionary program (or a neural network), this machine would not understand what it was doing either. There's no cognition happening in the space between perceiving and acting.

Say we were to mirror this thought experiment and rewire CountSheep not to feed back into itself, but to take in our drawings and respond with its own. CountSheep is no longer talking to itself but, in a sense, playing a game with us. A simple counting game, but a game nonetheless, much like the conversation in Searle's room. We give it a number, and it shows us the next number. Or... it tries to, anyway.

The black box we're dealing with is transparent (in fact, we made it), the task is absurdly limited, and the answers produced are often incorrect. But in spite of knowing all this, even in the patently incorrect responses from the network, there's still something there that feels, to me at least, meaningful.

And that's because there is meaning. First, there is a wealth of meaning in the drawings the machine is trained on. Human-made, hand-drawn numbers encode far more than simply the digits 0 to 9. They carry subtle reflections of how we write, and why we write the way we do. Though more symbolic than tally marks, Hindu-Arabic numerals were not designed arbitrarily, and we do not write them arbitrarily. If we did, we would expect the digits to look like a Pollock: streaks of random lines and dots.

Hindu-Arabic numerals must be both read and written, and so they encode a balance between mechanical efficiency and visual distinctness. Even deeper speculations can be made about the influence of anthropology, psychology, biology... but the unifying theme is this: because they are human-made, they reflect humanity, and so we should not be surprised that these strange, distorted images feel meaningful. In the language of Searle's thought experiment, I like to think of this as Underestimating the Dictionary: understating the meaning embedded in the inanimate. The dictionary must match the complexity of the task Searle's room has to accomplish, and that complexity can grow exponentially, to enormous and non-trivial proportions. In his original premise, a book containing the 'correct' response to every phrase in a language would have to be dynamically changing, Turing complete in a way. At that point, it no longer looks much like a dictionary at all.
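To make that growth concrete, here is a back-of-the-envelope Python sketch under a loudly hypothetical model: the room's dictionary is a pure lookup table keyed on everything said so far, drawn from a vocabulary of V words, and it must cover every input of up to n words. It then needs V + V^2 + ... + V^n entries. The function name `lookup_table_size` and the vocabulary and length figures are illustrative assumptions, not numbers from Searle.

```python
def lookup_table_size(vocab_size: int, max_len: int) -> int:
    """Count the distinct word sequences of length 1..max_len a pure lookup table must cover.

    Hypothetical model: every possible sequence (the conversation so far) needs its own entry.
    """
    return sum(vocab_size ** k for k in range(1, max_len + 1))


# A toy 10-word vocabulary and inputs of up to 3 words: 10 + 100 + 1000 entries.
print(lookup_table_size(10, 3))  # prints 1110

# A hypothetical 10,000-word vocabulary and inputs of up to 20 words.
size = lookup_table_size(10_000, 20)
print(len(str(size)))  # prints 81, i.e. roughly 10^80 entries
```

Each extra word of context multiplies the table by the vocabulary size, which is where the exponential blow-up comes from.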

The second meaningful component of CountSheep is its ability to output: to produce, to act, across a wide and varied range. After all, we are creatures of both perception and action, with complex motor cortices alongside our complex sensory cortices. And while CountSheep is limited to its 28x28-pixel output, with each pixel a value from 0 to 255, the space of possible outputs is still 256^(28x28) = 256^784 images, roughly 10^1888, far larger than the Shannon number (roughly 10^120) of possible chess games. Rather than condensing and distilling our deep well of encoded, meaningful knowledge, CountSheep throws it back and re-expresses it. You can teach a potted plant a language until you're blue in the face, but it can only really learn as much as it can show you it has learned.
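The size of that output space is easy to check. A quick Python sketch, assuming exactly 256 possible values per pixel and taking the Shannon number as 10^120, Shannon's classic lower-bound estimate for chess:

```python
import math

n_pixels = 28 * 28                      # 784 pixels per output image
n_images = 256 ** n_pixels              # exact integer; Python ints have arbitrary precision
digits = len(str(n_images))             # number of decimal digits in that count
exponent = n_pixels * math.log10(256)   # log10 of the count, so n_images ≈ 10^exponent

SHANNON_NUMBER = 10 ** 120              # Shannon's estimate of the number of possible chess games
print(digits, round(exponent, 2), n_images > SHANNON_NUMBER)  # prints: 1889 1888.06 True
```

Using Python's exact integers avoids any floating-point overflow in the count itself; the logarithm is only there to express the result as a power of ten.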

I feel once again like I am overselling this very simple program. At the end of the day, its behavior can be summarized as learning to regurgitate Frankensteinian depictions of numbers. However, when you describe it as something that learns to act from what it observes, it begins to sound less like a machine and a lot more like you or me. Input and output start to sound more like perception and action, like interaction. Searle's point is that if we try to find cognition in a machine the same way we find it subjectively in ourselves, we will have no luck. But if we approach cognition from opposite ends, flanking it and sneaking up on it from both perception and action, we won't come away empty-handed... and if we do, then like Dorothy, we may find that the cognition was within our perception and action the whole time (an essay for another time).

There is an undeniably enormous gulf between a dictionary program and a person. Some consider it so insurmountable that the two might as well be separate continents. But in the gray area between them there has to be something, and CountSheep and our Doodle Brains are pointing us in that direction.